2.2 Key framework: Understanding confidence in Artificial Intelligence (AI)

Chapter 2: Key Concepts and Framework

An overview of the key framework for understanding what influences confidence in artificial intelligence (AI) within health and care settings.

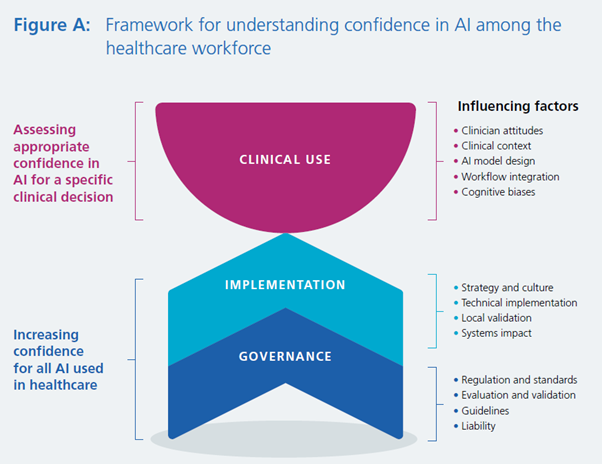

This report presents a framework for understanding what influences confidence in AI within health and care settings. The framework was developed following analysis of the academic literature and the interviews conducted for this research.

Establishing confidence in AI can be conceptualised as having two components:

- Increasing confidence in AI by establishing its trustworthiness (applies to all AI used in healthcare).
  - Trustworthiness can be established through the governance of AI technologies, which conveys adherence to standards and best practice and suggests readiness for implementation.
  - Trustworthiness can also be established through the robust evaluation and implementation of AI technologies in health and care settings.
  - Increasing confidence is desirable in this context.

- Assessing appropriate confidence in AI (applies only to AI used for clinical decision making).
  - During clinical decision making, clinicians should determine appropriate confidence in AI-derived information and balance this with other sources of clinical information.
  - Appropriate confidence in AI-derived information will vary depending on the technology and the clinical context.
  - High confidence is not always desirable in this context. For example, it may be entirely reasonable to consider a specific AI technology trustworthy while the appropriate confidence in a particular prediction from that technology is low. This can happen because the prediction contradicts strong clinical evidence or because the AI is being used in an unusual clinical situation. The challenge is to enable users to make context-dependent value judgements and continuously ascertain the appropriate level of confidence in AI-derived information.

Figure A illustrates the conceptual framework and lists the corresponding factors that influence confidence in AI. There are two groups of factors. Governance and implementation factors can establish a system's trustworthiness and increase confidence. Clinical use factors affect the assessment of confidence during clinical decision making on a case-by-case basis.

Chapters 3, 4 and 5 explore the factors in detail, including how these are supported by current initiatives and guidance.

The primary focus of this report is understanding and assessing appropriate levels of confidence in AI-derived information during clinical use. However, appropriate confidence in AI used for clinical decision making depends on first establishing the trustworthiness of these technologies. For this reason, the key elements of governance and implementation that underpin confidence are addressed before clinical use is discussed in more detail.

Governance

Increasing confidence through the governance of AI technologies

How AI technologies are governed can influence confidence in these technologies.

Formal means of governance and oversight can increase confidence in AI among workers who plan, implement and use AI for any task in health and care settings. These can include robust regulatory frameworks and standards for AI, clear evaluation and validation approaches, clinical and technical guidelines, and clarity on liability across different AI technologies.

Implementation

Increasing confidence through the robust implementation of AI technologies

The safe, effective and ethical implementation of AI in health and care settings is a key contributor to confidence in AI technologies among the workforce.

The leadership, management and governance bodies within health and care settings can support such implementation in two ways. First, they can establish AI as a strategic asset, for example by developing business cases. Second, they can maintain organisational cultures conducive to innovation, collaboration and public engagement (Section 2.4 discusses the importance of developing confidence in AI among patients).

Addressing any challenges with information technology infrastructures, interoperability, and data governance requirements is also crucial. Establishing and agreeing on related information technology and governance arrangements are instrumental to healthcare workers’ confidence in using AI technologies.

Procurement or commissioning entities within health and care settings will need to decide whether to validate AI technologies locally. Local validation checks that their performance translates to local data, patient populations and clinical scenarios. Where such validation is needed, evaluation using local data and workflows will be a necessary step in the robust implementation of AI technologies.

Healthcare workers will be more confident in AI technologies that are safely and efficiently integrated into existing workflow systems, including through established pathways for reporting safety events.

Clinical use

Assessing appropriate confidence in AI-derived information during clinical decision making

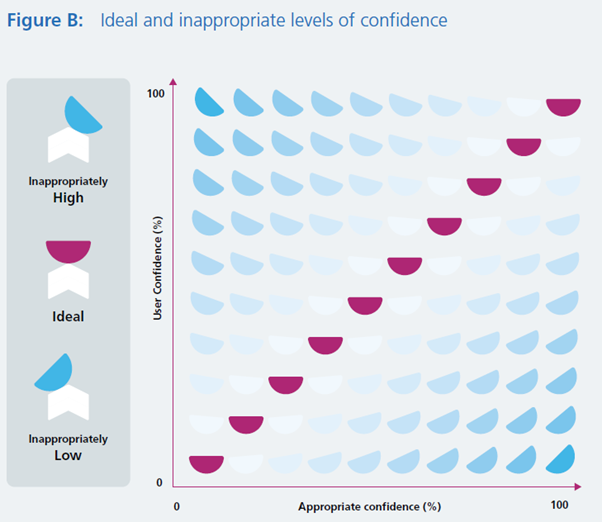

Figure B shows that a clinician's actual confidence (user confidence) in AI-derived information for decision making may be inappropriately high or low. This happens when it does not match the appropriate level for a clinical case, which is represented by the 'ideal' line. As discussed in this section, this appropriate level is likely to vary from case to case, depending on clinical factors and the AI-derived information itself.

To avoid inappropriately high or low levels of confidence, clinicians need to determine the appropriate level of confidence in the specific AI-derived information available at the point of making each clinical decision.

Determining an appropriate level of confidence in AI-derived information involves a complex set of considerations that depend on both the technology and the clinical scenario. Certain AI technologies and use cases present lower clinical or organisational risks than others.

Synthesising and evaluating information from many disparate sources are key skills in clinical decision making, whether for diagnosis, prognostication, or treatment. If incorporated and considered with appropriate confidence, information from AI technologies has the potential to make clinical decision making safer, more effective, and more efficient.

To achieve this, clinicians will need to understand when AI-derived information should and should not be relied upon, and how to modify their decision making process to accommodate and best utilise this information. This might include considering factors like:

- other sources of clinical information and how to balance these with AI-derived information in decision making

- the clinical case for which the AI is being used

- the intended use of the AI technology

Several factors can influence how clinicians view AI-derived information (their user confidence), potentially leading to inappropriately high or low levels of confidence. These include the following.

- Clinicians’ personal experiences and attitudes. General digital literacy, familiarity with technologies and computer systems in the workplace, and past experiences with AI or other innovations can influence assessments of confidence in AI-derived information.

- Clinical context. This includes the level of clinical risk and the degree of human oversight in the AI decision making workflow.

- Characteristics of AI model design. Various design characteristics can influence confidence in AI technologies. For example, the way AI predictions are presented (such as diagnoses, risk scores, or stratification recommendations) can affect how clinicians process information and potentially influence their ability to establish appropriate confidence in AI-derived information.

- Cognitive biases. Automation bias, aversion bias, alert fatigue, confirmation bias and rejection bias can all affect AI-assisted decision making. The propensity towards these biases may be affected by choices made about the point at which AI-derived information is integrated into the decision making workflow, or about how that information is presented. Interviewees for this research highlighted that related training and education should focus on enabling clinicians to recognise their inherent biases and understand how these affect their use of AI-derived information. Failure to do so may lead to unnecessary clinical risk or diminished patient benefit from AI technologies in healthcare.

Clinicians will therefore need to know when to rely on AI-derived information and how to adapt their decision making to make best use of it. Awareness of how their own attitudes and cognitive biases, the clinical context and the technical features of AI can influence their use of AI-derived information will be crucial to ensuring appropriate levels of confidence.

Page last reviewed: 12 April 2023

Next review due: 12 April 2024